This short article outlines the ethics of using AI (mainly generative AI, or large language models), as of June 2025. In short, there is currently no truly ethical way to engage with AI. This article will outline a few of the reasons that support this conclusion.

Copyright

There's quite a lot to unpick here, but the crux of it is that LLMs are “trained” by shovelling data into a neural network to form the AI’s model (basically its “brain”). The more data, the more useful the model becomes, so the tech companies quickly realised that they needed all the data. This led to suspicions, later found to be true, that copyrighted material was being pirated and consumed too. In Meta’s case, that amounted to 82TB of books and publications. In book terms, that’s tens of millions of publications, illegally downloaded from pirate site LibGen via BitTorrent. Meta’s engineers joked that “Torrenting from a corporate laptop doesn’t feel right”, but, you know, did it anyway.

It’s not just books. Images are also consumed in the model training process, leading Disney and Universal to sue Midjourney.

Code was also consumed en masse. Open source projects will typically publish their code in full, using licenses with conditions that ensure proper attribution takes place. However, the tech companies consumed it all, and their models will happily recreate snippets of code based on what they took, completely disregarding the license.

In short, nothing is safe. If it existed on the internet in any form, it was shovelled into the model with no attribution, never mind compensation.

Driving nuclear power

While we know that AI interactions consume vast amounts of power, the scale of it was relatively unknown until recently. Meta made headlines in June by striking a deal in Illinois to keep a nuclear reactor online for the next two decades, instead of letting it close in 2027.

That deal echoes similar moves by Google in California and Microsoft’s deal last year to re-open a reactor at Three Mile Island in 2028. The site is famous for its 1979 partial meltdown, although that accident happened in a different reactor unit from the one being restarted.

At a time when the world is looking for greener energy and an answer to the climate crisis, AI is pushing big tech companies against the flow, encouraging power consumption on a vast scale and driving huge investment in non-renewable, polluting energy.

AI makes you dumb

MIT researchers published a study in June this year which found that using AI (in a certain way) will make you dumb(er). To put it in a less antagonistic way, the study suggests that if you start your creative process by using AI, you engage your brain less, and end up less creative, than when you start your creative process without it and perhaps only use AI at the end to tidy up the results.

This finding relates to the evaluation of students writing an essay, and at what point in that process they engage with AI. Those starting with AI felt disassociated from their work, and 80% of these students couldn’t quote from their submission at all. Meanwhile, those who started without AI felt like they took ownership of the result, even if later tweaked by AI, and 80% of those students could quote from their work.

The paper also notes a tendency towards “linguistically bland” output from those starting with AI, which suggests to me that we’re in a race to the bottom. This is especially true if future models are trained on the internet as it is today, leading to homogenisation, where biases are reinforced, creativity stifled and mistakes compounded.

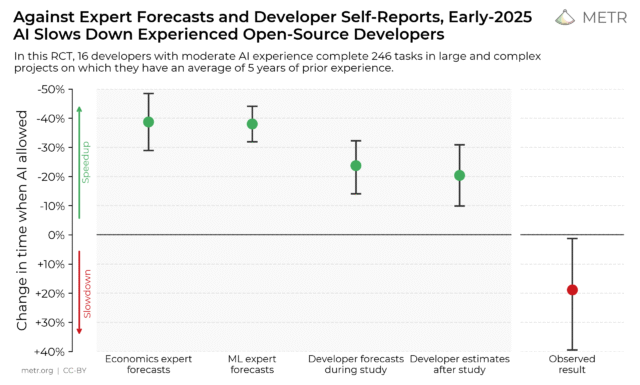

At least developers benefit from all that coding advice and boilerplate code generation! Oh wait, no they don’t, according to a recent study. In fact, instead of any increase in productivity, the study found that (experienced) developers were 20% LESS productive, as they had to fix errors in the AI-generated code, or simply had to review obscure code before it was fit for purpose.

What’s incredible about this study is that economists thought it would show an increase of around 40%, and AI experts expected an increase of around 35%. Even after the study was complete, the developers themselves still thought they had been around 20% more productive! But the results showed the opposite.

AI Slop

The rise of AI has led to a corresponding rise of easily accessible tools to create social media content. This has led in turn to an enormous uptick in what’s being called “AI Slop”, a term to capture this kind of easily produced, often professional-looking, but frequently weird or simply misleading content.

AI Slop is often eye-catching, and Meta has doubled down on AI content, changing its algorithms (on Meta-owned sites, such as Facebook, Instagram or Threads) to encourage the use of these tools and monetise viral content. That content could be images, video, or songs.

Aside from the deterioration of social media feeds, the bigger concern is when these tools are used to mislead, particularly when the content is political.

Transparency

Related to AI Slop, the realism of certain content often makes it difficult to know that it’s generated by AI. An image or even video can be so realistic that it looks authentic, which creates the risk of disinformation for political or personal gain. We need an equivalent to the padlock we used to see on websites that indicated that they were secure. Such a mark of authenticity actually exists already, but it isn’t in widespread use.

This can also be a difficult question to answer for prose. How much is too much AI? If an author creates their novel and then edits it using AI, is it now a “work of AI”? How about if only 20 paragraphs were rephrased by using AI tools, is that a work of AI? Publisher Faber now include “Human Written” in their works as a result of pushback from readers concerned by the use of AI tools. This will be an ever-evolving debate, I suspect.

And the rest

There are honestly too many other issues with AI to cover without turning this article into a monster. This excellent entry on Wikipedia covers a wide variety of additional concerns, including the very real, very negative impact AI scraping has had on Wikipedia itself!

Hopefully the five primary areas I’ve covered will give you food for thought the next time you consider whether to engage with an AI tool, let alone pay £20/month (or £200/month!) for a subscription.